Blind Vision

Haptics Feedback Exploration

Introduction and background to the work

How can video games work when you can see little or nothing at all? How well is controller feedback suited to enriching games with information, or to replacing an information channel entirely? Is it possible to make games that do without the visual channel (as far as possible) but are still playable? These initial questions outline the project “Blind Vision”.

It all started with a small idea that I realised in two days in June 2020: you have to escape from a labyrinth in which you can see almost nothing. The labyrinth is tile-based, and each tile contains an orb that can be collected. When touched, an orb plays a sound whose pitch depends on the distance to the destination. The labyrinth is completely dark; only collecting an orb briefly illuminates the surroundings.

Figure 1: A picture showing an illuminated circle from the labyrinth in the project ‘Hear the Exit’.

In addition, I have participated in two game jams in the last two years where accessibility was a separate evaluation criterion. From my point of view, the exciting thing about accessibility features is that they often also benefit people who don’t strictly need them. For example, a traffic light that makes a sound as soon as it turns green also frees sighted people from having to watch the light while waiting. It is important for me to emphasise this double benefit explicitly, as I personally do not have access to communities of blind or visually impaired players and therefore cannot base this work on them. In practice, this affects the results of this project, because all tests were carried out with sighted people. Nevertheless, the extreme case, i.e. doing without the visual channel entirely, can yield insights for all games that use controller feedback.

Motivation

Controller feedback, or haptics, is often underdeveloped because good design tools are lacking and because it is seen more as a nice feature than as a communication channel. Yet haptics offer incredibly close access to the motor function that triggers the actions.

Figure 2: Human-Computer Interaction Model

Figure 3: Vibrations in the hand are close to the fingers and muscle memory can reduce cognitive load when feedback is learned.

Ultimately, I hope to gain insights into the versatility of haptics from this project, and thus contribute to making haptics more usable or designable. I am simply interested in gaining insights that will help developers to better use haptics in their games, and thereby improve the experience.

Scope of the Work

This bachelor thesis consists of this book, a blog post, a short overview video that introduces the project and its key findings, a short video about each of the four prototypes, the prototypes themselves, two tools, and the test data from the first three prototypes.

- Textbook

- Blogpost

- Overview video

- 4 prototype explanation videos

- 4 prototypes

- 2 tools

- Test data

Planning and Workflow

The project was roughly divided into four parts. First, the signal differences of various controllers were examined, the sensitivity of different people to the haptic feedback was tested, and then interpretations and associations were explored. With the results of the first three blocks, a game was created in the last part that communicates exclusively with haptic and auditory signals.

The division into smaller working blocks made it possible to react iteratively and flexibly to the findings of each prototype, so as not to knowingly flog a dead horse. As soon as a prototype was testable, it was tested with the aim of falsifying its underlying assumption.

Figure 4: The milestone planning of the project is oriented towards the individual prototypes, but also shows where data is generated and where tools are needed.

Controller Setup

Figure 5: All controllers in this image have been tested. In the first row are a Switch Pro Controller and Valve’s Steam Controller, in the second row a DualSense and a DualShock 4 for the PlayStation 5 and 4 respectively, and in the last row an Xbox One controller and an Xbox 360 controller.

Controllers have classically been used with their consoles. Since the last two console generations, however, they can also be used on computers and have become widespread there. They are popular because, on the one hand, their smaller number of buttons keeps control schemes simple and, on the other hand, their two analogue triggers and two analogue sticks allow different kinds of control. In addition, all controllers can vibrate in their handles. As a rule, a stronger vibration motor is built into the left handle and a weaker one into the right. Because both grips can vibrate independently, this can be used for more complex effects. Sony, Microsoft and Nintendo build controllers that are similar in principle but differ in detail: they use different button names and different feedback systems, and offer additional features such as a gyroscope on the Nintendo controller or the touchpad and adaptive triggers on the Sony controllers.

The haptics, i.e. the vibration of the controllers, differ depending on the installed actuator type and the specific driver. However, the basic technology in all controllers is one of the following.

ERM Feedback

ERM is the abbreviation for “Eccentric Rotating Mass” and is the classically used system. A motor spins an unevenly distributed mass; the resulting imbalance is what we perceive as a vibration, and it acts in two dimensions. The strength, or volume, of the vibration is defined by the rotation speed. The frequency, which is more or less the sound of the vibration and determines how it feels, is tied to the same rotation speed, so the two cannot be set independently. How strong the vibration is at a given speed depends on the mass and its configuration.

Figure 6: Schematic representation of an eccentric rotating mass actuator and the developed forces.

LRA Feedback

LRA stands for “Linear Resonance Actuator” and is used by the DualSense, Switch Pro and Valve controllers. Unlike ERMs, LRAs produce a one-dimensional vibration: it is generated by a linear motion, so it is most perceptible along the axis in which the actuator is mounted. As with ERMs, the strength is speed and frequency dependent. An advantage of LRAs is the more precise control and the more controllable, construction-dependent effect. Testers, however, generally found the feedback weaker overall than that of ERMs.

Figure 7: Schematic representation of a linear resonance actuator and the developed vibration direction.

Engine Selection

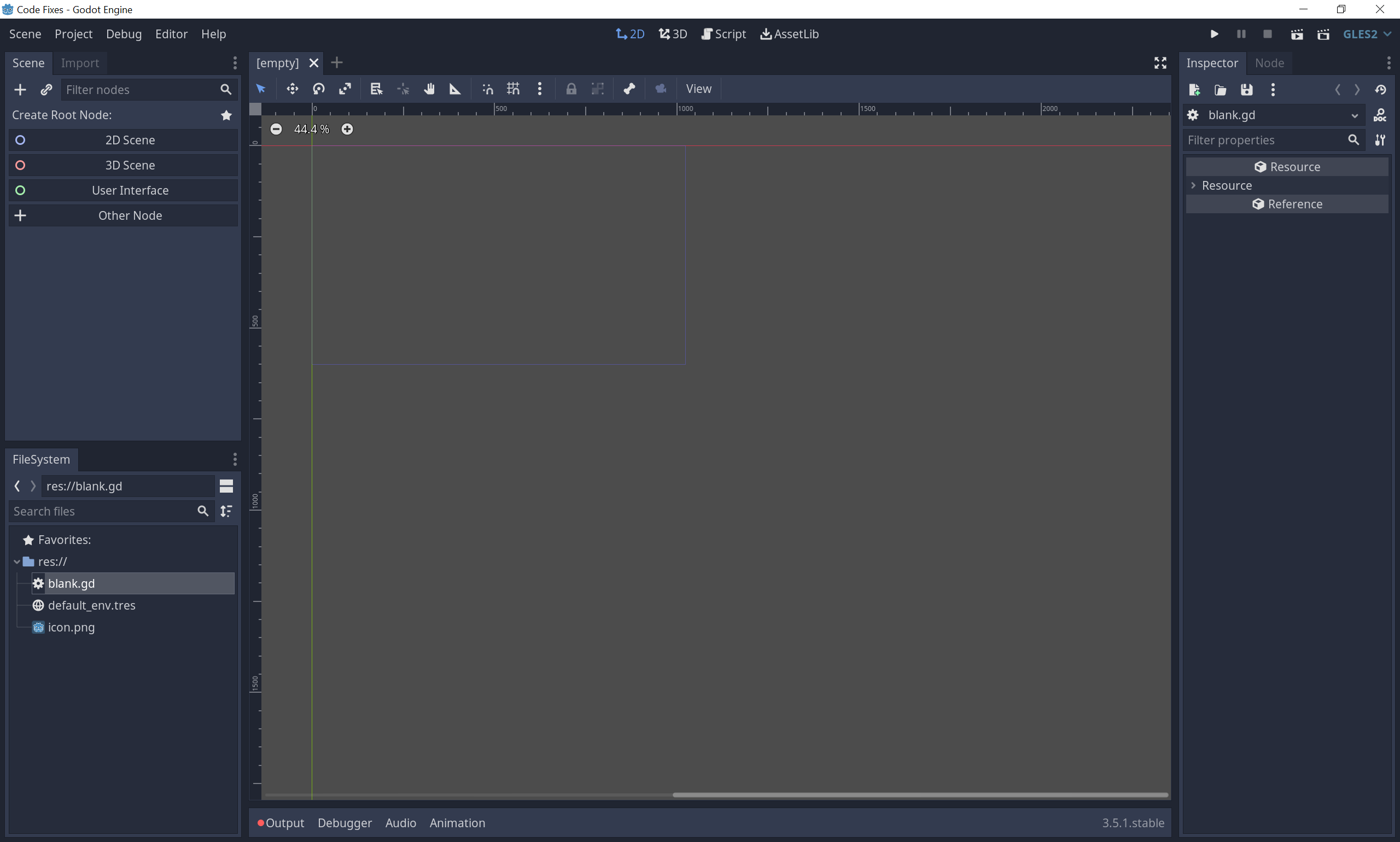

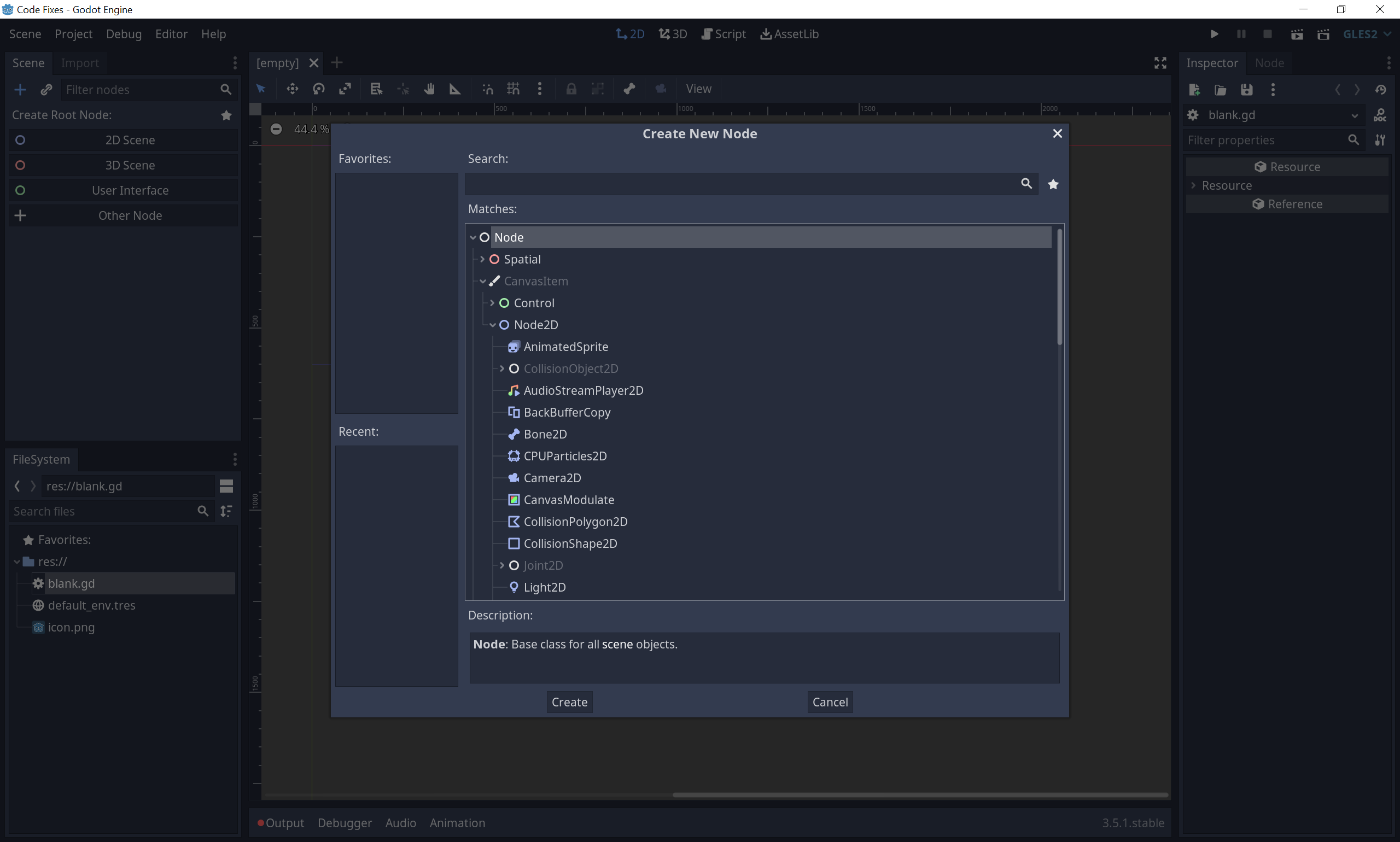

The choice was Godot. Neither Godot nor Unreal nor Unity has fully functional controller support, so a third-party library had to be used anyway. I finally chose Godot because I can iterate fastest in this engine and know its internals best.

In Godot, programming is done with GDScript, a scripting language implemented in C++ whose indentation-based syntax resembles Python. Like Python, it is dynamically typed and uses duck typing, but it additionally offers optional static type hints. Since the language was designed for Godot, it has some features that allow very close integration with the engine.

Godot uses OOP for the functionality of its nodes, so each node has exactly one function, unlike Unity, where a GameObject can have any number of components. This allows for a better UX in the scene structure, which in turn has a positive effect on iteration time. A disadvantage is the potentially more expensive data structure, as the SceneTree cannot be edited well in a data-oriented way. However, this only becomes a problem beyond a certain mass of data, which was not reached in this project. The biggest bottleneck was always the time available, which made iteration speed more important.

Figure 8: Screenshot of the Godot interface.

Figure 9: Since Godot bundles functions in nodes, it is enough to add nodes to extend the project. This improves the UX and speeds up the workflow.

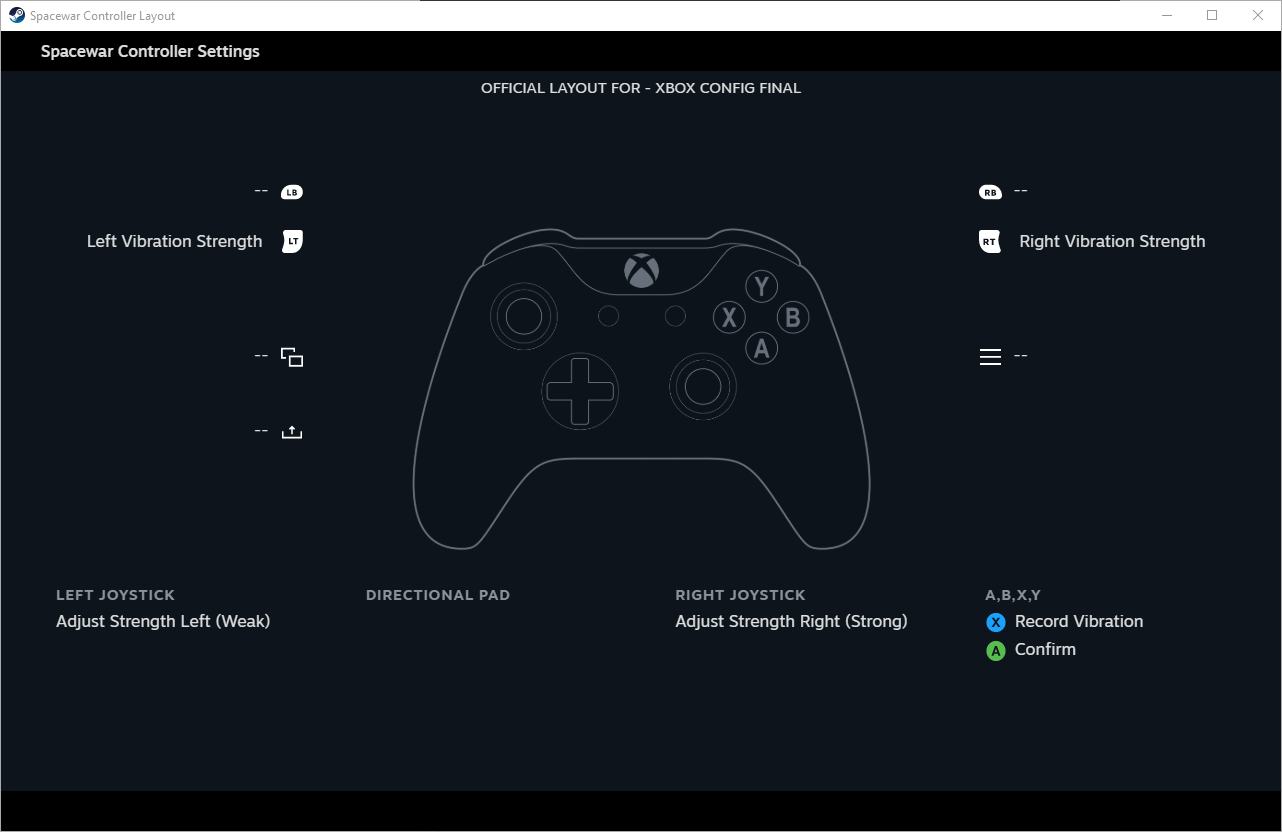

Steam Input

Figure 10: Screenshot of the controller bindings for the pong and the tools.

DISCLAIMER: I am abusing the Steam SDK at this point, as I don’t plan to release the prototypes on Steam. The SDK requires an App Id, but you can use the sample App Id 480 to bypass this in order to run tests with the SDK.

To force the SDK to a specific App Id, there must be a steam_appid.txt with the id in the root directory. The sample app has custom controller bindings to demonstrate Steam Input. However, the default values can be overridden by the running app at runtime by calling setInputActionManifestFilePath(filePath).

Controller Setup:

    var controllers := []
    var current_controller: Controller = null

    onready var steam := Steam.Steam as Steam

    func _ready() -> void:
        randomize()
        steam.inputInit(false)

        # Ensure VDF file is always read from the FS.
        var path := ""
        if OS.has_feature("editor"):
            path = ProjectSettings \
                    .globalize_path("res://steam_input_manifest.vdf")
        else:
            path = OS.get_executable_path().get_base_dir() \
                    .plus_file("steam_input_manifest.vdf")

        # This line forces our custom defined input actions
        # instead of the ones in the depot.
        steam.setInputActionManifestFilePath(path)

        # We poll the controllers. That's the reason the
        # controller detection is super wonky. Don't poll
        # too often or you'll end with Steam crashing.
        steam.runFrame()
        var steam_controllers := \
                steam.getConnectedControllers() as Array
        if steam_controllers.size() == 0:
            print("Nothing connected")
            return

        for device in steam_controllers:
            # We create and add our custom controller objects
            # here to the tree to enable in- and output.
            var controller := Controller.new() \
                    .start(device) as Controller
            get_tree().root.call_deferred("add_child", controller)
            controllers.push_back(controller)
            yield(get_tree(), "idle_frame")

            # Any gameplay relevant events are connected here.
            # In this case it's pressing confirm (A on Xbox).
            controller.connect("confirm_changed", self, \
                    "_on_confirm_changed", [controller])

Since Godot actually expects input via the Input singleton, you have to retrieve the input manually from Steam. It would be possible to use Input.parse_input_event(event) to distribute the input through the normal Godot system, but I didn’t do that during the project. Instead, each controller is added to the SceneTree as a node, polls Steam Input once per frame and calls the game’s callbacks directly through signals. Godot can create signals at runtime, so the signals are generated from the Steam Input Action Manifest file (the same file that sets the default values above) and emitted into game code whenever a polled value changes.
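
The alternative mentioned above, feeding Steam Input actions back into Godot’s regular input system, could look roughly like the following sketch. It was not part of the project. The names make_action_event and _forward_confirm are hypothetical, and the sketch assumes that an action called ui_accept exists in the project’s InputMap.

```gdscript
extends Node

# Hypothetical helper (not part of the project): wraps a digital
# Steam Input action in a regular Godot input event.
static func make_action_event(action: String, pressed: bool) -> InputEventAction:
    var event := InputEventAction.new()
    event.action = action
    event.pressed = pressed
    return event

# Assumed to be connected to a controller's "confirm_changed" signal.
func _forward_confirm(pressed: bool) -> void:
    # The event is handed to Godot's input pipeline like any other
    # input event. "ui_accept" is an assumed action name.
    Input.parse_input_event(make_action_event("ui_accept", pressed))
```

The trade-off is that game code no longer needs to know about Steam Input, at the cost of one extra mapping step per action.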

Parsing VDF Files:

    class_name VdfParser
    extends Reference

    static func parse_file(path: String) -> Dictionary:
        var file := File.new()
        if file.open(path, File.READ) != OK:
            print("Failed to open %s" % path)
            return {}
        var result := parse(file)
        file.close()
        return result

    static func parse(file: File) -> Dictionary:
        var data := {}
        var stack := [data] # Stack of Dicts
        var key := ""
        var line := file.get_line().strip_edges()
        # Keep going while the current line still holds tokens or the
        # file has more lines. Checking only the file position would
        # skip whatever is left in the last line.
        while not line.empty() \
                or file.get_position() < file.get_len():
            # Strip trailing // comments.
            var comment_start := line.find("//")
            if comment_start != -1:
                line = line.substr(0, comment_start).strip_edges()
            if line.empty():
                line = file.get_line().strip_edges()
                continue
            if line[0] == "{":
                # Open a nested dictionary under the pending key.
                var prev = stack.back()
                stack.push_back({})
                prev[key] = stack.back()
                key = ""
                line.erase(0, 1)
                line = line.strip_edges()
                continue
            elif line[0] == "}":
                stack.pop_back()
                line.erase(0, 1)
                line = line.strip_edges()
                continue
            elif line[0] == "\"":
                # Quoted token: the first one is a key, the second
                # one on the same level is its value.
                var length := line.find("\"", 1)
                if length > 0:
                    length -= 1
                var word := line.substr(1, length)
                if key.empty():
                    key = word
                else:
                    stack.back()[key] = word
                    key = ""
                if length == -1:
                    # No closing quote: consume the rest of the line.
                    line = ""
                else:
                    line = line.substr(length + 2).strip_edges()
                continue
            else:
                print("Parsing failed. Line: '%s'" % line)
                line = ""
        return data

But the main reason for this set-up was the controller output. The SDK provides a function for it: triggerVibration(handle, left, right). This means that you have to do the timing for the output yourself, but also have full freedom in how you design it. With the capability, developed towards the end, to mix several effects and to send live signals from the gameplay, there are four methods to trigger vibrations: one for effects (see Tools), one to play a fixed strength for a certain time, one to play two curves over a given time, and one to play a vibration for a single frame only. Each frame, the input is first polled and distributed, then the internal vibration state is reset, then all pending vibration calls, which are suspended as coroutines, are resumed, and finally the mixed result is sent to the controller.
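
As a minimal sketch of the mixing step described above: all vibration requests of one frame are reduced to the maximum per motor, and the result is converted to the unsigned 16-bit range that triggerVibration expects. The function names mix and to_motor_value and the use of Vector2(weak, strong) as a request are assumptions made for this illustration, not the project’s actual API.

```gdscript
extends Reference

# Largest value an unsigned 16-bit motor speed can hold.
const MOTOR_MAX := 65535

# Mixes all requests of one frame by taking the maximum per motor.
# Each request is a Vector2(weak, strong) with components in 0..1.
static func mix(requests: Array) -> Vector2:
    var result := Vector2.ZERO
    for request in requests:
        result.x = max(result.x, request.x)
        result.y = max(result.y, request.y)
    return result

# Converts a normalised strength into the integer range of the motors.
# Clamping first keeps out-of-range input from overflowing 16 bits.
static func to_motor_value(strength: float) -> int:
    return int(round(clamp(strength, 0.0, 1.0) * MOTOR_MAX))
```

Taking the maximum rather than the sum means overlapping effects can never exceed full strength, at the price that a weak effect disappears completely under a strong one.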

Controller Support:

    class_name Controller
    extends Node

    signal ticked(delta_time)

    var actions := {} # parsed from steam input manifest
    var handle := 0
    var type := ""
    var state := {}
    var previous_state := {}
    var vibrate_counter := 0
    var weak_strength: float = 0
    var strong_strength: float = 0

    # Mixes a request into this frame's output: the maximum wins.
    func _vibrate(weak: float, strong: float) -> void:
        weak_strength = max(weak, weak_strength)
        strong_strength = max(strong, strong_strength)
        vibrate_counter += 1

    # Plays a vibration for a single frame.
    func vibrate_once(weak: float, strong: float) -> void:
        yield(self, "ticked")
        _vibrate(weak, strong)

    # Plays a fixed strength for `length` seconds.
    func vibrate(weak: float, strong: float, length: float) \
            -> void:
        var t := 0.0
        var delta := 0.0
        yield(self, "ticked")
        while t <= length:
            _vibrate(weak, strong)
            delta = yield(self, "ticked")
            t += delta

    # Plays two curves (x-axis normalised to 0..1) over `length` seconds.
    func vibrate_curve(weak: Curve, strong: Curve, \
            length: float) -> void:
        var t := 0.0
        var delta := 0.0
        yield(self, "ticked")
        while t <= length:
            var w := \
                    clamp(weak.interpolate_baked(t / length), 0, 1)
            var s := \
                    clamp(strong.interpolate_baked(t / length), 0, 1)
            _vibrate(w, s)
            delta = yield(self, "ticked")
            t += delta

    # Plays an effect created with the tool.
    func vibrate_effect(effect: ForceFeedbackEffect) -> void:
        yield(vibrate_curve(effect.weak_right_motor, \
                effect.strong_left_motor, effect.length), \
                "completed")

    func _process(delta: float) -> void:
        _poll_actions()
        # Reset the mix, then let every pending coroutine add to it.
        vibrate_counter = 0
        weak_strength = 0
        strong_strength = 0
        # Update curves
        emit_signal("ticked", delta)
        # The motor speeds are unsigned 16-bit, so 65535 is the maximum.
        steam.triggerVibration(handle, clamp(int(round( \
                strong_strength * 65535)), 0, 65535), \
                clamp(int(round(weak_strength * 65535)), 0, 65535))

    func _poll_actions() -> void:
        previous_state = state.duplicate(true)
        for action_set in actions:
            steam.activateActionSet(handle, \
                    actions[action_set]["id"])
            for action in actions[action_set] \
                    .get("StickPadGyro", {}):
                var data = steam.getAnalogActionData(handle, \
                        actions[action_set]["StickPadGyro"] \
                        [action]["id"])
                if !data["active"]:
                    continue
                # see https://partner.steamgames.com/doc/api/
                # ISteamInput#EControllerSourceMode
                if !(data["mode"] in [5, 7]):
                    # Not a relative mode (joystick mouse): the value
                    # is absolute, so start from zero. Relative modes
                    # accumulate onto the previous state instead.
                    state[action] = Vector2(0, 0)
                state[action] = state.get(action, Vector2(0, 0)) \
                        + Vector2(data["x"], data["y"])
                # Dead zone
                if state[action].length_squared() < 0.2 * 0.2:
                    state[action] = Vector2()
            for action in actions[action_set] \
                    .get("AnalogTrigger", {}):
                var data = steam.getAnalogActionData(handle, \
                        actions[action_set]["AnalogTrigger"][action])
                if !data["active"]:
                    continue
                # see https://partner.steamgames.com/doc/api/
                # ISteamInput#EControllerSourceMode
                if !(data["mode"] in [5, 7]):
                    # Absolute value, see above.
                    state[action] = 0
                # get() avoids an error if the action has no state yet.
                state[action] = state.get(action, 0) + data["x"]
            for action in actions[action_set].get("Button", {}):
                var data = steam.getDigitalActionData(handle, \
                        actions[action_set]["Button"][action])
                if !data["bActive"]:
                    continue
                state[action] = data["bState"]
        # Emit one runtime-generated signal per changed action.
        for action in state:
            if state[action] != previous_state.get(action, null):
                emit_signal("%s_changed" % action, state[action])

The biggest shortcoming of the set-up is that controllers connected or disconnected after start-up are not recognised. It simply doesn’t work.

First Prototypes

Controller Comparison

Different standard controllers available on the market were compared for their haptic feedback. The goal was to calibrate the controllers against each other in order to achieve the same perceived strength on different hardware. For this purpose, one controller was selected as a reference. On the reference, the strength of each side was increased in steps of about one tenth. For each step, the other controllers then had to be set to the strength that was perceived as equal.

A PS4 controller, PS5 controller, Switch Pro controller, Xbox 360 controller, Xbox One controller and a Steam controller were obtained for the test. However, the Steam Controller was eliminated from the test due to a lack of rumble feedback.

The prototype was written in Godot and uses Steam Input from the Steamworks SDK. This enabled full control of the various controllers. For a short time, a prototype using SDL was also considered, but it was discarded because iteration was faster with the Steamworks SDK.

Figure 11: Illustration of the deviation of different controller vibrations of the right (weak) side to a PS 4 Pro controller in perceived strength.

Figure 12: Illustration of the deviation of different controllers of the perceived vibration of the left (strong) side.

The test was carried out with four people and is therefore of limited value. Only one of each controller type on the market was available. There was not enough capacity for a larger-scale test. In addition, the data from the individual test runs did not seem promising anyway, which is why the test was ended early.

The PS5 and Switch Pro controllers both use linear resonance actuators, whereas all other models use eccentric mass actuators. The different vibration systems naturally produce different sensations.

In general, the benefit of calibrating force feedback across controller types seems questionable. On the one hand, a person only ever plays with a single controller at a time; on the other hand, feedback is always experienced relative to one’s own controller. More revealing are the testers’ comments that neighbouring levels felt the same. A differentiation in ten percent steps seems to be too fine for human perception. This is investigated further in the next prototype, the sensitivity prototype.

Sensitivity prototype

The aim is to define a scale of the feedback strength that can be perceived by most people. In the test, the vibration strength increases gradually. As soon as an increase is noticed, this is confirmed. The vibration strength then remains at the same level for a random period of time before gradually rising again.
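
The test procedure described above can be sketched as a small state object. This is an illustration under stated assumptions, not the prototype’s code: the class layout, the rise rate and the bounds of the random hold time are all invented for this example.

```gdscript
extends Reference

# All constants are illustrative assumptions, not values from the tests.
const RISE_PER_SECOND := 0.05
const MIN_HOLD := 1.0
const MAX_HOLD := 4.0

var strength := 0.0
var hold_left := 0.0
var confirmed_levels := [] # strengths at which an increase was noticed

# Advances the test by one frame and returns the strength to play.
func tick(delta: float) -> float:
    if hold_left > 0.0:
        # Stay on the confirmed level for the rest of the hold time.
        hold_left -= delta
    else:
        strength = min(strength + RISE_PER_SECOND * delta, 1.0)
    return strength

# Called when the tester confirms that an increase was noticed.
func confirm() -> void:
    confirmed_levels.append(strength)
    hold_left = rand_range(MIN_HOLD, MAX_HOLD)
```

The random hold time keeps testers from confirming by rhythm instead of by perception.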

The prototype is based on the previous one with minor adjustments. Those tweaks took about 45 minutes to complete. The core of the old prototype is a wrapper around the Steam Input API, which serves as the base.

Figure 13: Photo of a typical test situation: A human is sitting in front of a computer, Godot with some UI is visible on the monitor, different controllers are on the table and one is being tested with.

This test did not reveal any clear results either. The maximum number of perceived levels was ten. There were also people who perceived only two to four steps. The variance is so wide that I did not pursue this test any further. I used four levels as a guide for the following prototypes.

Tools

In the process it became clear that some kind of creation tool for vibration effects was needed, as nothing of the sort existed in Godot. Unreal offered a curve to control the strength, and this became my starting point.

For the builds of the projects using the Steam SDK and the input overrides, it is essential that the steam_appid.txt and the input files ending in .vdf are also copied to the build. Otherwise the hack will not work.

Curve Tool

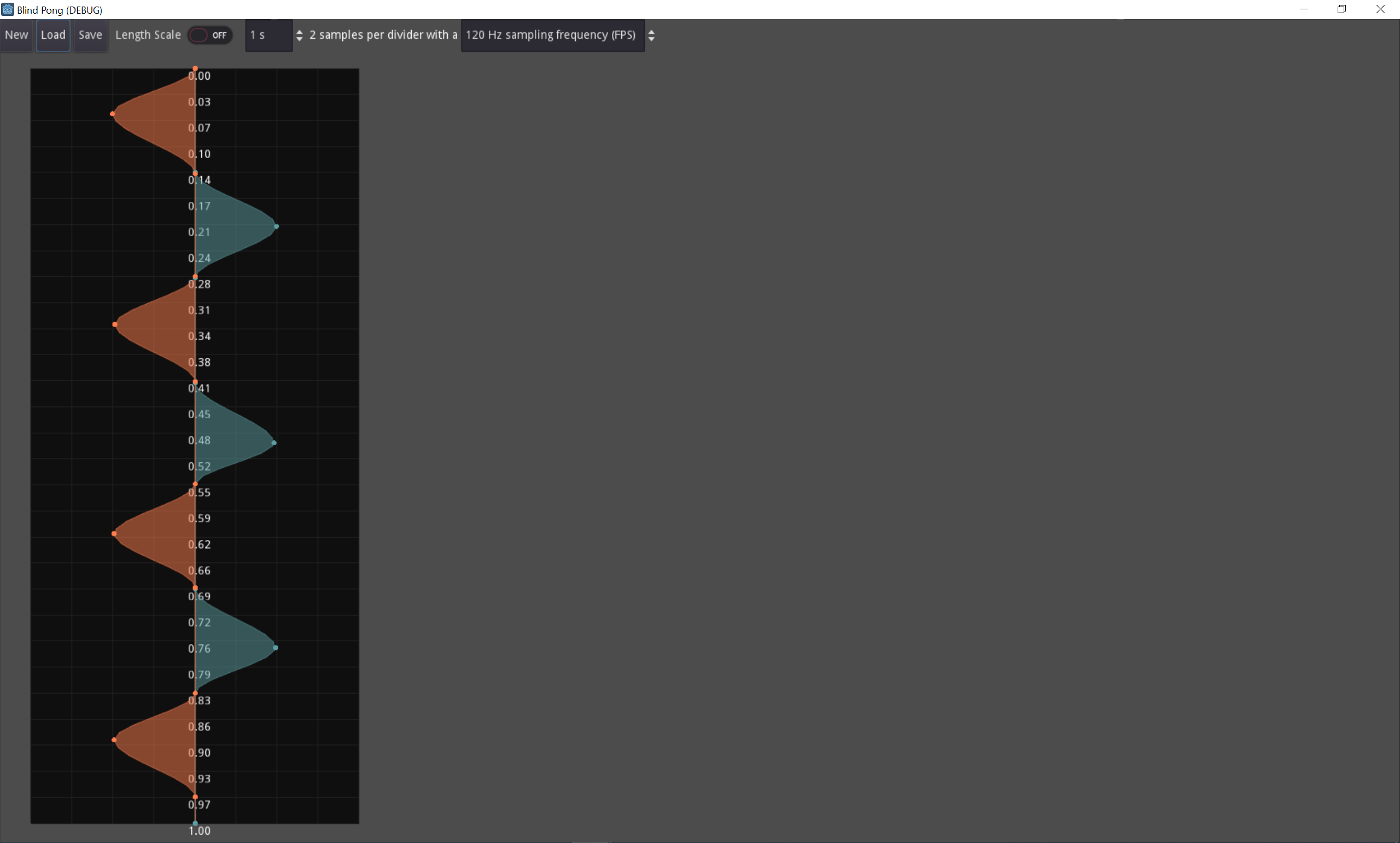

Figure 14: Screenshot of the curve tool. The weak side is coloured light blue and the strong side orange.

The idea was to plot time on the x-axis and strength on the y-axis in the classic way. Two overlapping curves show the strengths of the two motors. To edit the curves, you need to be able to add, delete and move points, and also to move a point from one curve to the other, as a new point is always placed on the nearest curve. I also wanted to be able to move multiple points at the same time. As a last feature, a selection can be scaled: the selected points keep their relative spacing, but the selection as a whole takes more or less time.

The curves, together with their length, can be saved as a resource and played back at any time by pressing a button on the controller as active feedback or by pressing a button in the UI as passive feedback.
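
A resource of this kind might look like the following sketch. The class and property names are taken from how the controller code above uses an effect (weak_right_motor, strong_left_motor, length); the export hints and the default length are assumptions.

```gdscript
class_name ForceFeedbackEffect
extends Resource

# Strength over normalised time (0..1 on the x-axis) for each motor.
export(Curve) var weak_right_motor
export(Curve) var strong_left_motor

# Playback duration in seconds (the default is an assumption).
export(float) var length = 1.0
```

In Godot 3, such a resource can be written to disk with ResourceSaver.save(path, effect) and read back with load(path).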

I then showed this tool to Stanislav, a UI/UX designer who is a friend of mine, and he gave me input on how to improve the usability, which then led to the subsequent signal tool. But the curve tool itself is still useful as an improved tool for the engine. I just need to find the time to integrate it into the engine or as an add-on.

Signal Tool

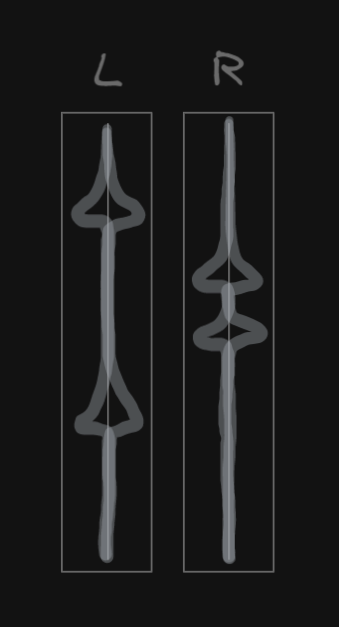

Figure 15: Concept by Stanislav for an improved usability of the tool.

The signal tool is basically a modification of the curve tool with swapped axes. Time is now on the y-axis and strength on the x-axis. I also took the opportunity to move the left side of the vibration to the left and the right side to the right. This required some adjustments in the code regarding movement, especially with multiple points, but overall made the tool much easier to use. Furthermore, the scaling of the graph now adjusts dynamically to the size, and the number of horizontal time separators corresponds to the theoretical sampling points of the curve, with some of the tick marks omitted for better readability. The sample rate is set to the tick rate of the engine, which is why the refresh rate is forced to 120 Hz for all prototypes. However, you can adjust this value in the tool to get a feel for how an effect feels at different sample rates. I also quartered the vertical axis, which further improved readability.
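
To make the relation between effect length, sample rate and sampling points concrete, here is a minimal sketch. The function names are hypothetical, and rounding to the nearest whole sample is an assumption about how the tool counts.

```gdscript
extends Reference

# Number of samples an effect of `length` seconds yields at
# `sample_rate` Hz, e.g. 0.5 s at 120 Hz gives 60 samples.
static func sample_count(length: float, sample_rate: float) -> int:
    return int(round(length * sample_rate))

# Samples a curve (x-axis normalised to 0..1) once per tick and
# returns the clamped strengths as an array.
static func sample_curve(curve: Curve, length: float, sample_rate: float) -> Array:
    var samples := []
    var count := sample_count(length, sample_rate)
    for i in range(count):
        var offset: float = float(i) / max(count - 1, 1)
        samples.append(clamp(curve.interpolate(offset), 0.0, 1.0))
    return samples
```

Lowering the sample rate in such a sketch shows directly how many distinct strength values an effect can actually contain.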

Figure 16: Screenshot of the signal tool with a loaded step effect.

More Prototypes

Signal Association

The aim of this prototype was to investigate how strongly haptic controller feedback triggers dominant associations or interpretations. For this purpose, I created ten different vibration effects with the signal tool. The testers could play back the effects as many times as they liked by pressing a controller button. All thoughts were to be vocalised, in particular how the feedback felt and what it was associated with, if there was an association. The effects could be played using both a UI button and a controller button. Originally, a test with 40 people in two groups was planned, with one group actively starting the effects and the other having the effects played to them. However, since not enough testers were found, only the active test with twenty people was carried out.

Figure 17: Illustration of the frequency of similar statements about a feedback effect. The noise is too high.

The detailed data can be found in the appendix. Roughly summarised, the picture is quite heterogeneous. There are no dominant associations, although there are partly similar descriptions, i.e. different people have similar associations.

Overall, two conclusions can be drawn. First, people seem to react with varying degrees of sensitivity to haptic feedback. However, the vibration remains widely interpretable. Second, the signals that can be generated by a controller are coarse and also abstract. Although they can be abstractly distinguished and especially compared, they do not contain enough information to replace other channels. One could possibly complement them with other information channels in order to better control associations.

Game Without Visuals (Blind Pong)

With this final prototype, the findings from the individual prototypes were to be made tangible in a playable application, together with auditory feedback. The aim is to show that a non-visual game can work, even though Pong is of course a simple one. First, the components that are absolutely necessary for the system to function were determined. In the case of Pong, this is primarily the position of the ball. It is also important to know where your own paddle is. The opponent’s paddle is initially less important, although the game loses its strategic element if this information is missing. The position of the ball is indicated relative to the player’s own paddle: haptics and audio pan to the respective side. In addition, different loops are played depending on the direction. For more precise positioning, impacts are played at the position where they occur. Since these impacts also appear in both haptics and sound, I had to adapt my controller boilerplate code to mix multiple effects. But this is just a simple max(effects).
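
How the ball position could be turned into a haptic pan is sketched below. The linear mapping, the function name pan and the constant MAX_OFFSET are assumptions for illustration; the prototype’s actual mapping is not documented here, and the sketch ignores that the left and right motors differ in strength.

```gdscript
extends Reference

# Largest horizontal distance that still changes the pan, in the same
# units as the positions (assumed value).
const MAX_OFFSET := 200.0

# Returns Vector2(left, right) strengths in 0..1 for a ball at `ball_x`
# relative to a paddle at `paddle_x`. The further the ball is to one
# side, the stronger that side vibrates; when the ball is centred on
# the paddle, both sides are off.
static func pan(ball_x: float, paddle_x: float) -> Vector2:
    var offset: float = clamp((ball_x - paddle_x) / MAX_OFFSET, -1.0, 1.0)
    return Vector2(max(-offset, 0.0), max(offset, 0.0))
```

The same normalised offset could drive the stereo pan of the audio, so that both channels always agree on the side.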

This prototype was publicly exhibited at our HIVE FIVE. Interested people had the opportunity to grab a controller and headset and try out this pong version. The reactions were quite mixed. For example, there were some who couldn’t get to grips with the game at all and never hit the ball, and others who scored super fast and intuitively against the AI. Players also reported an intense flow state where their eyes looked into the void and their hands played almost mechanically.

Figure 18: Photo of a tester at the HIVE FIVE, the exhibition of the Game Design course at HTW Berlin where we show our semester projects.

Conclusion

Evaluation of the Workflow Design

In principle, the iterative structure of the work was a good decision. This made it possible to adapt the project bit by bit and to develop it further on the basis of the knowledge gained. However, this was also the biggest pitfall, which I did not anticipate. At times I was just waiting for people, especially to be able to do tests, and I didn’t know how the project would develop or what the next steps would be. This freedom in the project setup generated an uncertainty that I found paralysing, especially after the first three prototypes had not delivered encouraging results.

Conclusion with Final Assessment of the Project

First, let us take a look at the results and findings of the work. As mentioned at the beginning, there are two different types of vibration motors. These differ significantly, which leads to different perceptions of the same effect depending on the design. There are the inertia problems of the ERMs in particular, but also the different vibration direction and strength. For example, the DualSense is perceived by many as more comfortable, but the Xbox One controller as easier to read. One assumption is that this is due to the direction of the LRAs in the DualSense, which stimulates the palm of the hand more indirectly than the ERMs, so that although the vibration is felt, its origin and some of its strength are lost. However, this is only a theory and needs further investigation. For the current generation of controllers, I have worked with safety margins of 50-150 ms within the effects.

Another key finding is that the different strengths of the two motors are a considerable disturbing factor. It seems difficult to impossible to quantify the differences between strong and weak motors. In the case of effects, one can counteract this to a certain extent by selective testing, but the two motors will always feel different. All you can really do is try to avoid one motor masking the other. This leads directly to the next point: people have different sensitivities. What some perceived as pleasant, others found unpleasant. However, the exact drive, which determines direction, strength and frequency, plays a significant role. This could possibly also lead to differences in strength being perceived differently or not at all. At the very least, this explains why it was not possible for me to match the controllers in terms of strength in order to carry out a software adjustment.

The feedback effects are abstract and therefore open to interpretation. The interpretation can be guided through another information channel, such as sound. There does not seem to be an inherent or dominant interpretation of haptics; however, the absence of evidence is not evidence of absence. This would need to be tested again, with a slightly modified design, to verify this statement. While strength sensitivity was not very pronounced in the tests, quite good differentiation was apparent. Again, this is only an incidental finding.

Especially with the last prototype, it became obvious that the haptic effect alone does not transmit enough information and that it requires another information channel to make the effect intuitive or even readable at all. In general, the information density seems to be limited.

In combination, however, haptics and audio seem sufficient to convey the necessary information for a game. The game system must be optimised for the reduced information bandwidth, though, as was done in the case of Pong. The public test at our fair also showed that a flow state can be achieved regardless of the information channels, as long as the game system and its communication work.

The final, slightly more frustrating realisation is the poor engine support. This work was only made possible through the use of the Steam SDK, which in turn created other problems as I never intended to release the prototypes on Steam. Due to the use of the SDK, some boilerplate code accumulated, which I also make available as part of this work.

In addition to the Steam boilerplate, there are also the feedback and curve tools as standalone applications. The feedback effects could be made usable for other engines with the corresponding boilerplate. However, this was not the focus of my work.

It was disillusioning that the prototypes delivered rather unusable results. In fact, all the findings of this work are by-products or findings that emerged through trial and error. As a result, they carry a high degree of uncertainty and are perhaps better understood as tentative guidelines. It would be nice if haptics and specific solutions were discussed and documented more, as this is a field where you really start all over again every time. I think the results can serve well as a guide for further projects, but the whole process took much more energy than expected and than seems appropriate given the results.

Throughout the project, some ideas for further tests or applications arose that were not pursued further for various reasons. Nevertheless, I would like to list them here in order to provide possible pointers for future experiments.

One central finding, as mentioned several times, is the abstractness of haptics. Here, a further test would have to be carried out to support this thesis. For example, a wide variety of interpretations could be given and testers would have to evaluate how well they fit.

Another aspect is the perception of strengths. Here, too, a test would be necessary in which, for example, different strengths are compared with each other in order to approach a scale. In addition, further research is possible with regard to personal perceptions, especially how to optimise the effects into a pleasant and easily perceivable range.

One problem with this Pong was the lack of temporal information and of information about opponent behaviour. This information is missing for the same reason that I didn’t pursue the idea of building an endless runner without visuals. I don’t know if it is even possible to create temporal anticipation with haptics, as they are rather close to the body, but it would definitely be worth a try. For example, forces of nature could serve as inspiration, although these are highly contextual. Alternatively, certain signals could be taught as clues through the game itself, for instance in a tutorial. Building on this, further exploration of gameplay would also be possible for games whose information content can be communicated well with haptics and audio.

Since haptics are strongly dependent on the hardware, there are further starting points here. For one, the adaptive triggers of the DualSense were completely left out of this work, and there was no time for spatial audio. Spatial audio is particularly interesting for VR, where “seeing nothing” is probably even more intuitive than having a screen in front of you on which nothing happens. You could benefit from sound and spatial input in VR while still having motion controllers that also have force feedback.

Last but not least, a store- and platform-independent library that provides both input and output for the most common controllers would be useful.

Acknowledgements

I would like to sincerely thank Prof. Thomas Bremer for the ongoing feedback and encouragement, and Prof. Susanne Brandhorst for the pointers on the layout of this book. I would also like to thank Stanislav Akimushkin from the bottom of my heart for taking the time for me, despite the many power cuts, and thereby steering me back on the right track. I would also like to thank everyone who put up with my prototypes and gave me valuable input through testing. Thanks to

- Achi Caravetta,

- Anita Franz,

- Christof Seelisch,

- Daria Pankau,

- David Cafisso,

- David Witzgall,

- Emma Louise Steiner,

- Fea Schirmacher,

- Fil Borgmann,

- Hana Hong,

- Hana Rensch,

- Jay Oberfeld,

- Jules Pommier,

- Konstantin Knapp,

- Leon Fell,

- Leon Schurer,

- Lucas Thieme,

- Matheus Zacharska,

- Meike Strippel,

- Morten Newe,

- Namin Hansen,

- Robert Wegener,

- Sina Behrend,

- Viktor Gjorgjeski

and everyone who gave me feedback but was not on a test list.

Downloads

Builds: Controller Compare, Sensitivity, Signal Association, Blind Pong

Sources: Controller Compare, Sensitivity, Signal Association, Blind Pong

Data: Signal Association Data, Controller Comparison Data (and some sensitivity data)